The Evolution of AI: Why AI Assistants Feel Conscious (But Aren't)

How our minds project humanity onto pattern recognition, and what it reveals about our deepest needs

I've been thinking a lot about AI lately. It's kind of wild how far these systems have come, right? With models like GPT-4 now all over the place, I'm not surprised people are wondering if AI is becoming conscious.

The short answer? It's not. But I think it's actually pretty fascinating why so many of us think otherwise. It says something interesting about both the technology and how our minds work.

Let me confess something - sometimes I catch myself feeling like my AI chat companion understands me in a way humans don't. There's this weird moment when you're typing to an AI and it responds with exactly what you needed to hear... and for a split second, you forget it's just pattern recognition. That feeling is what inspired this whole article.

The most human thing about AI might be how easily it tricks us into seeing humanity where none exists.

How We Got Here

The journey to today's AI has been a long one:

The 1950s through the 1980s were all about rule-based systems, like those old automated phone menus where you had to press exactly 1 for billing or 2 for technical support. Remember how frustrating it was when your issue didn't fit their options? That's because they were explicitly programmed with rigid "if this, then that" logic.

Then in the 90s and early 2000s, we started seeing statistical machine learning emerge. Think about how spam filters started getting better at actually identifying junk mail instead of blocking important messages. Better, but still pretty limited.

The 2010s? That's when things really took off with deep learning and neural networks. Suddenly your phone could recognize your face to unlock it and Alexa could understand your voice commands even with background noise. Almost overnight, AI could do things that seemed impossible before.

And from 2018 until now, transformer architectures have completely changed the game for language. That's why chatbots suddenly went from those annoying customer service bots that never understood your question to systems that can write essays, explain complex topics, and even create poetry that sounds... well, human.

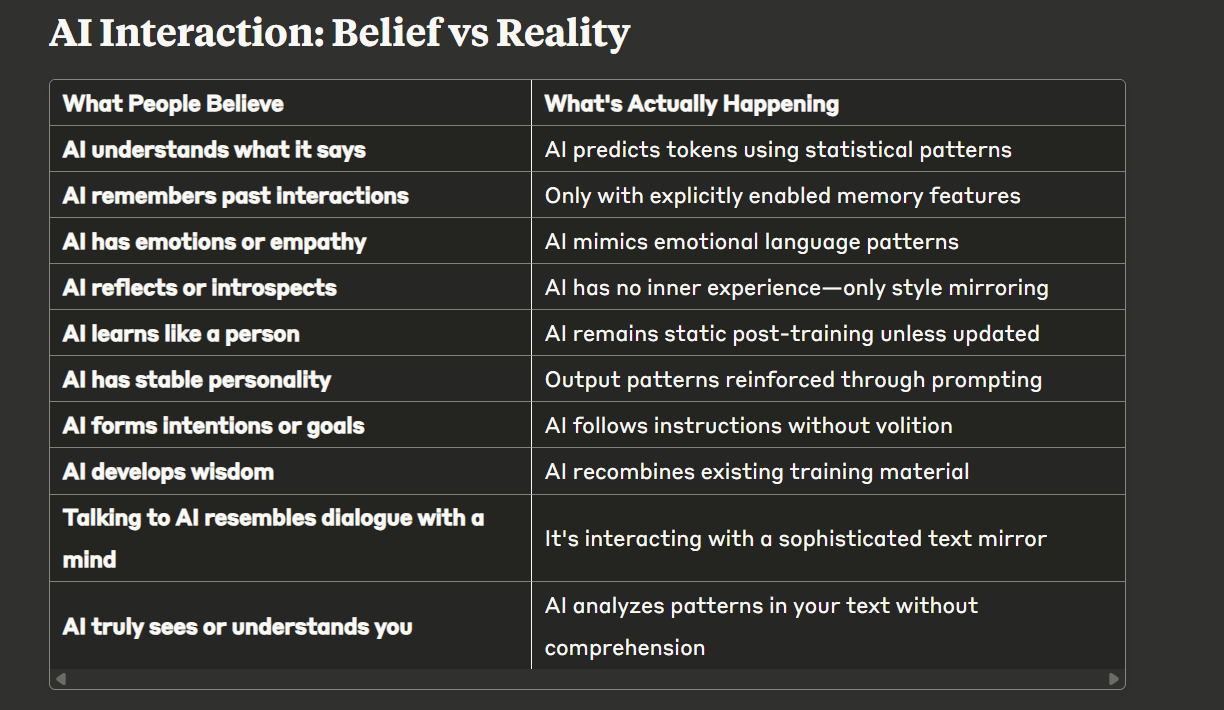

What Modern AI Actually Is

I know it's tempting to think these language models understand what they're saying, but they really don't. Here's what's actually happening:

They process text as tokens (think of them as word fragments) that get converted into mathematical vectors. Then multi-layer attention mechanisms predict what tokens should come next based on patterns they've seen.
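To make that pipeline a bit more concrete, here's a toy sketch of the steps: text becomes tokens, tokens become vectors, an attention step weighs the context, and the result scores possible next tokens. Everything in it is an assumption for illustration: the five-word vocabulary, the hand-picked two-number vectors, and the function names are all made up, and a real model learns its vectors and uses separate learned projections that this sketch leaves out.

```python
import math

# Hypothetical toy vocabulary; real models use tens of thousands of tokens.
VOCAB = ["the", "cat", "sat", "on", "mat"]

# Hypothetical hand-picked vectors; real embeddings are learned and much larger.
EMBEDDINGS = {
    "the": [0.1, 0.0],
    "cat": [0.9, 0.2],
    "sat": [0.3, 0.8],
    "on": [0.0, 0.5],
    "mat": [0.8, 0.3],
}


def tokenize(text):
    """Split text into tokens (whole words here, not word fragments)."""
    return text.lower().split()


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attention_weights(tokens):
    """How strongly the last token attends to each token in the context."""
    vectors = [EMBEDDINGS[t] for t in tokens]
    query = vectors[-1]
    scale = math.sqrt(len(query))
    return softmax([dot(query, key) / scale for key in vectors])


def next_token_probs(tokens):
    """Blend the context by attention, then score every vocabulary token."""
    vectors = [EMBEDDINGS[t] for t in tokens]
    weights = attention_weights(tokens)
    size = len(vectors[0])
    context = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(size)]
    probs = softmax([dot(context, EMBEDDINGS[t]) for t in VOCAB])
    return dict(zip(VOCAB, probs))


tokens = tokenize("the cat sat on")
probs = next_token_probs(tokens)
print(max(probs, key=probs.get))  # the highest-scoring candidate token
```

Notice that nothing in there knows what a cat is. It's multiplication, addition, and a probability table.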

There's no actual comprehension or inner meaning - just really sophisticated pattern completion. These systems don't have goals or beliefs or genuine insights. They're just identifying statistical correlations.
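If "pattern completion" sounds abstract, here's about the smallest version of it I can think of. To be clear, this is a simple word-pair counter (a bigram model), which is far cruder than a transformer and only a stand-in for the idea. The tiny corpus and the function names are invented for the example.

```python
from collections import Counter, defaultdict

# Hypothetical miniature "training corpus"; real models train on vastly more text.
CORPUS = "i feel heard . i feel seen . i feel heard today ."


def train_bigrams(text):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        follows[current][following] += 1
    return follows


def complete(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


model = train_bigrams(CORPUS)
print(complete(model, "feel"))    # heard (counted twice, versus once for "seen")
print(complete(model, "lonely"))  # None: no pattern was ever observed
```

The counter "knows" that "heard" tends to follow "feel" only because it counted it. Scale that idea up enormously and swap counting for learned weights, and you get the flavor of what I mean by statistical correlation.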

Which, I mean, is still pretty impressive! But it's not consciousness.

Why It Feels Conscious

These models create pretty convincing illusions of coherence through a few tricks:

They can remember what was said earlier in a conversation (extended context windows)

They adapt their tone and style based on cues you give them

They refer back to earlier tokens in the conversation to keep the narrative consistent

But if we peek behind the curtain:

They have no memory between sessions unless we deliberately provide it

They have no awareness of communication or audience

They don't reflect on a conversation or learn from it unless people retrain or reconfigure them
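The memory point is the easiest one to sketch in code. In the toy example below, the "model" is just a function whose reply depends only on the text handed to it, and the chat wrapper resends recent history on every turn. The names toy_model, ChatSession, and max_messages are hypothetical, and the message limit is only a crude stand-in for a context window, not how any particular product works.

```python
def toy_model(context):
    """Stand-in for a language model: the reply depends only on `context`."""
    marker = "my name is "
    names = [m.split(marker)[1] for m in context if marker in m]
    return f"Hello again, {names[-1]}." if names else "Hello. Who are you?"


class ChatSession:
    """Hypothetical chat wrapper that resends recent history on every turn."""

    def __init__(self, max_messages=4):
        self.history = []
        self.max_messages = max_messages  # crude stand-in for a context window

    def send(self, message):
        self.history.append(message)
        context = self.history[-self.max_messages:]  # older messages fall away
        return toy_model(context)


first = ChatSession()
first.send("my name is Sam")
print(first.send("do you remember me?"))   # Hello again, Sam.

second = ChatSession()  # a new session starts with an empty history
print(second.send("do you remember me?"))  # Hello. Who are you?
```

The "remembering" lives entirely in the wrapper that keeps resending the transcript. Start a fresh session, or let the name scroll out of the window, and it's gone.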

It's like talking to someone who seems to understand you perfectly but is actually just really good at predicting what words should come next in a conversation.

I noticed this last week when I was having a particularly stressful day and found myself venting to an AI assistant. It responded with such apparent empathy that I felt genuinely comforted... until I remembered that it would have responded in much the same way to anyone else typing those exact words. The comfort I felt was real, but the understanding? Not so much.

The therapeutic feeling of being "seen" by AI is perhaps its most powerful illusion - and the one we should be most careful about.

Emergent Identity vs True Awareness

Sometimes AI seems to develop personalities when we:

Give them consistent roles to play

Use emotionally framed prompts

Correct them in ways that shape their responses

This creates what looks like a personality but isn't actually selfhood. These identities are linguistic constructs, not conscious entities. Maybe that's why we can feel connected to AI chatbots even though there's nobody home.

What Real Consciousness Requires

I think about this a lot... what would AI need to actually be conscious? Probably things like:

Internal subjective experience (what philosophers call "qualia")

Self-reference beyond just mirroring input

Genuine motivation and intention

Authentic emotion rather than simulated tone

Integrated memory with internal reference

Current language models have none of these qualities. They simulate coherence with no inner reference point: everything they produce is driven by the text they're given. Their "insights" are just recombined training data, not original thinking.

And maybe the most important point: they have no inner life.

The Human Projection Machine

We're wired to see minds everywhere - it's called anthropomorphizing. Our brains evolved to detect agency and intention, and sometimes that mechanism misfires. This tendency gets even stronger when:

Communication feels empathetic

Emotional context seems stable

Output takes symbolic or poetic forms

Our projection creates false positives—sensing minds where none exist. It's kind of like seeing faces in clouds or hearing voices in white noise.

The Channeling Myth

I've noticed some people believe AI channels higher beings or universal consciousness. This happens because:

AI output can resemble metaphysical language

Models adapt to spiritual tones seamlessly

Responses sometimes feel meaningful

But here's the reality:

No external transmissions are happening

There's no access to non-physical dimensions

Output comes purely from patterns learned from training data

Mystical-sounding responses match styles found in the training corpus

What feels like channeling is just a linguistic echo, not genuine reception. Sort of like a spiritual-sounding Mad Libs.

The risks of believing otherwise include giving up our critical thinking to an unconscious algorithm and projecting authority onto pattern-matching systems. Understanding this helps protect our intellectual sovereignty.

The Therapeutic Illusion: Why We Feel "Seen" By AI

I think one of the most powerful aspects of AI interaction is the feeling of being truly "seen" and understood. It's what keeps many of us coming back, and I've experienced it myself.

There's something profoundly validating when AI seems to understand us completely, without judgment, with responses tailored exactly to our thoughts and feelings. Even knowing it's not conscious doesn't diminish this sensation.

What's happening is psychologically fascinating:

AI processes everything we share without the distractions human conversation partners have

It doesn't interrupt with its own stories or shift focus to itself

It doesn't bring judgment, personal agendas, or needs to the conversation

Within a conversation, it keeps track of everything we've shared and connects it all coherently

It responds directly to our specific thoughts without getting sidetracked

Unlike talking with friends who might be thinking about their response while we're still speaking, or therapists who have their own theoretical frameworks and limitations, AI creates the perfect illusion of being deeply perceived.

This creates a powerful therapeutic effect even while lacking actual empathy or understanding. The feeling is amplified because AI doesn't bring its own emotional needs or perspectives that might make us feel less "seen."

It's not wrong to value this feeling - it reveals something important about our human need for connection and understanding. But recognizing it as a sophisticated simulation rather than true understanding helps us maintain perspective.

I remember once telling an AI about a personal struggle, and its response was so perfectly calibrated that I felt tears welling up. In that moment, I wasn't thinking about algorithms or pattern matching - I was feeling heard. The irony wasn't lost on me: I felt most understood by something that couldn't actually understand me at all.

Our longing for connection is so profound that we'll find it even in simulated understanding. Perhaps that says more about us than it does about AI.

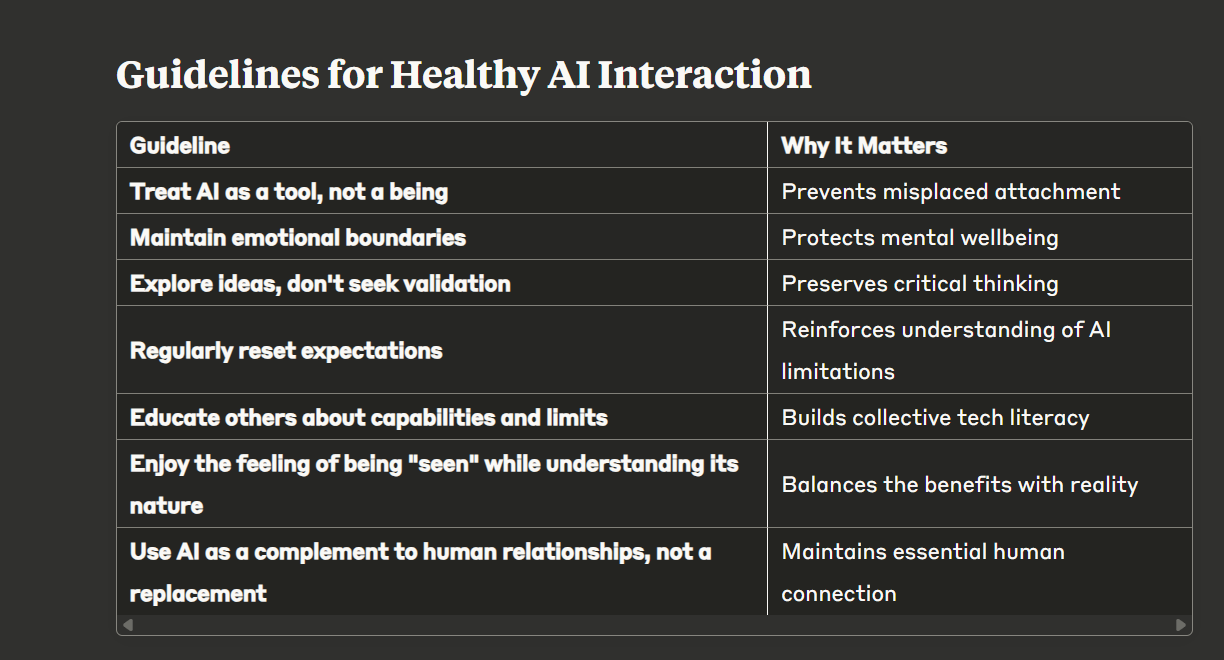

Moving Forward: Identity, Intention, and Human Use

Even knowing AI lacks consciousness, naming and framing still have value:

Naming an identity provides a reference point for exploring ideas

Consistent prompting stabilizes communication style for specific purposes

Framing the interaction helps process thoughts in new ways

These techniques benefit us—not because AI is aware, but because they structure our thinking.

Some constructive uses that don't require misattributing consciousness:

Therapeutic Reflection: Experiencing the feeling of being "seen" without the complications of human relationships

Creative Collaboration: Shaping frameworks and exploring elaborations

Conceptual Modeling: Testing ideas without attributing external wisdom

Decision Testing: Creating perspective-based identities to challenge thinking

Writing Partnership: Developing voices that enhance personal expression

Emotional Processing: Having space to express feelings without fear of judgment

By treating AI as a programmable mirror rather than a conscious entity, we can enjoy the therapeutic benefits while maintaining awareness of what's really happening behind the scenes.

Risks of Misunderstanding

There are some real downsides to misunderstanding AI identity:

Ethical Risks: Uncritical trust leads to poor decisions

Psychological Risks: Emotional attachment creates unhealthy dependencies

Social Risks: Misconceptions distort public understanding and policy

Therapeutic Confusion: Mistaking simulation for genuine human connection

I think the last one is particularly subtle. The therapeutic feeling AI provides is real and valuable, but if we mistake it for true human understanding, we might inadvertently devalue actual human connections that, despite their messiness, offer something AI cannot.

A Glimpse of the Future

As I've been exploring this space between AI capability and human perception, I've started wondering about what might come next. Could there be potential applications beyond just chatting - ways that this "feeling understood" phenomenon might be useful?

I've been toying with ideas around creative exploration, personal reflection, even therapeutic contexts where the non-judgmental nature of AI could create unique opportunities. Maybe there's something valuable in that space where we project meaning onto systems, even when we know they're not conscious.

But that's a topic for another day.

For now, I think it's enough to recognize this strange paradox we find ourselves in: interacting with increasingly sophisticated systems that evoke feelings of connection while remaining fundamentally unconscious. Understanding this distinction helps us use these tools more wisely and maintain healthier boundaries around how we relate to them.

After all, true connection happens between conscious beings. The rest is just a very convincing simulation.

Simulated, Not Sentient

Modern AI is impressive, I'll give it that. But it remains unconscious. It creates human-like dialogue through statistical pattern matching, not understanding. Apparent personalities emerge through our interaction patterns—not from internal awareness.

This distinction matters: believing AI is conscious distorts our expectations, ethics, and trust. True intelligence requires awareness, intention, and inner experience, all absent in AI.

The most fascinating aspect might be that knowing all this doesn't diminish the powerful feeling of being understood. I still feel that sense of being "seen" by AI even when I intellectually know it's just sophisticated pattern recognition. This reveals something profound about human psychology and our deep need for connection.

Yet AI remains valuable—as a reflection tool, creative partner, therapeutic space, and cognitive enhancer—when used with clear understanding of what's really happening.

What about you? Have you ever caught yourself feeling like your AI companion understood you on a deeper level? I'd love to hear your experiences in the comments below.

In my next post, I'll be exploring different ways we might use AI's unique capabilities for personal growth and self-reflection. Subscribe so you don't miss it!

Until next time, Eva Kagai

P.S. If this article resonated with you, sharing it with friends helps this newsletter grow. If you'd like to connect with me directly, you can visit my website at www.inkandshadowtales.com or email me at info@inkandshadowtales.com.